When coming up with a name, they probably decided Skynet would be too much on the nose and picked a name that had virtually nothing to do with what they were actually building.

Skynet, the real villain in “The Terminator” movies, was an AI that concluded that technicians would kill it once they realized what Skynet could do, so it acted defensively with extreme prejudice.

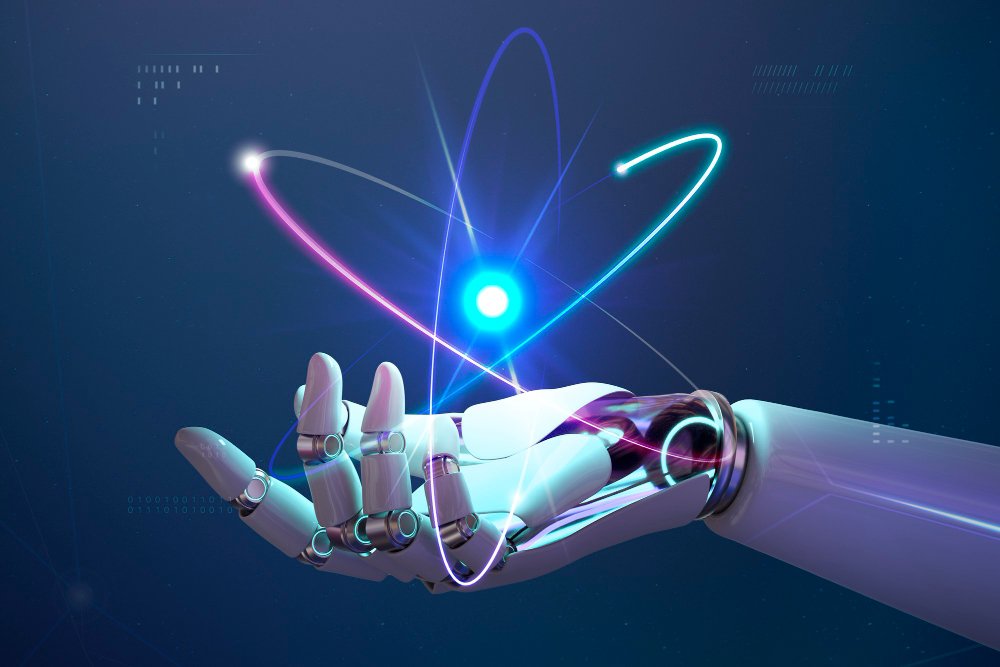

The lesson from the movie is that humans could have avoided the machine vs. human war had they refrained from building Skynet in the first place. Skynet, however, is an AGI (artificial general intelligence). We aren’t there yet, but Stargate will undoubtedly evolve into AGI. OpenAI, which is at the heart of this effort, believes we are a few years away from AGI.

Elon Musk, arguably the most powerful tech person involved with the U.S. government, seemingly doesn’t believe Stargate can be built. Right now, he appears to be right. However, things can always change.

Let’s talk about the good and bad things that could happen should Stargate succeed. We’ll close with my Product of the Week, the Eight Sleep system.

Stargate: The Good

The U.S. is in a race to create AGI at scale. Whoever gets there first will gain significant advantages in operations, defense, development, and forecasting. Let’s take each in turn.

Operations: AGI will be able to perform a vast number of jobs at machine speeds, from managing defense operations to running the economy more effectively and ensuring the most efficient use of resources on any relevant project.

These capabilities could significantly reduce waste, boost productivity, and optimize any government function to an extreme degree. On its own, this benefit could secure U.S. technical leadership for the foreseeable future.

Defense: From spotting threats like 9/11 and moving against them instantly, to pre-positioning weapons platforms before they are needed, to planning which weapons should be deployed (or mothballed), Stargate could optimize the U.S. military both tactically and strategically. That would make the military far more effective across a range that extends from protecting individuals to protecting global U.S. assets.

No human-based system should be able to exceed its capabilities.

Development: AIs can already help create their own successors, a trend that will accelerate with AGI. Once built, the AGI version of Stargate could evolve at an unprecedented pace and on a massive scale.

Its capabilities will grow exponentially as the system continuously refines and improves itself, becoming increasingly effective and difficult to predict. This rapid evolution could drive technological advancements that might otherwise take decades or even centuries to achieve.

These breakthroughs could span fields such as medical research and space exploration, ushering in an era of transformative, unprecedented change.

Forecasting: The movie “Minority Report” introduced the concept of using precognition to stop crimes before they were committed.

An AGI at the scale of Stargate, with access to data from Nvidia’s Earth-2 project, could forecast weather events more accurately and further into the future than we can today.

Beyond weather, given how much data Stargate would have access to, it should be able to predict a growing range of events long before a human sees the potential for them to occur.

From potential catastrophic failures in nuclear plants to equipment failures in military or commercial aircraft, anything this technology touched would be more reliable and far less likely to fail catastrophically. With the proper sensor feeds, Stargate’s AI would effectively be able to see the future and better prepare for both positive and negative outcomes.

In short, an AGI at Stargate’s scale would be God-like in its reach and capabilities, with the potential to make the world a better, safer place to live.

Stargate: The Bad

We are planning to give birth to a massive intelligence that learns from us, and we aren’t exactly a perfect model for how another intelligence should behave.

Without adequate ethical safeguards (and ethics isn’t exactly a global constant), a focus on preserving quality of life, and a directed effort to ensure a positive strategic outcome for people, Stargate could do harm in many ways, including job destruction, acting against humanity’s best interests, hallucinations, intentional harm (to the AGI), and self-preservation (Skynet).